Maximize ROI with Flexinfra’s AI-Stack

Our professional team will handle the complex GPU infrastructure, so you can focus on training and optimizing your AI models.

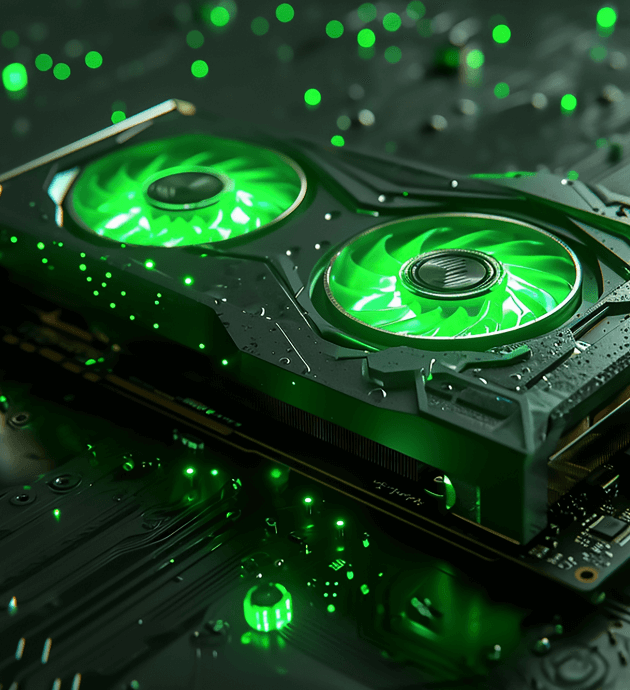

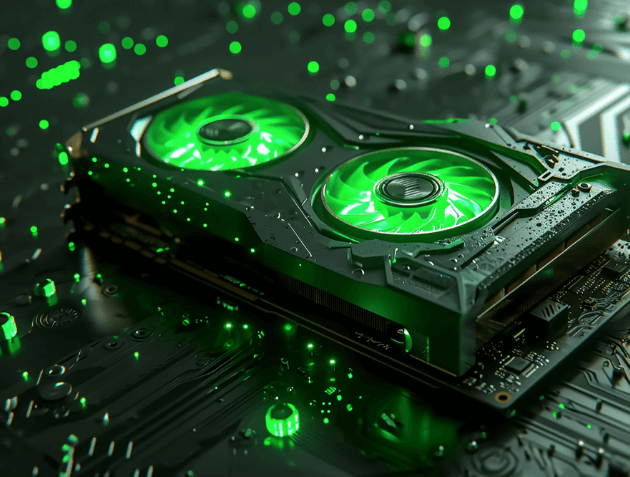

10X Performance in Private AI

Drive efficiency with lower costs and better-performing computing

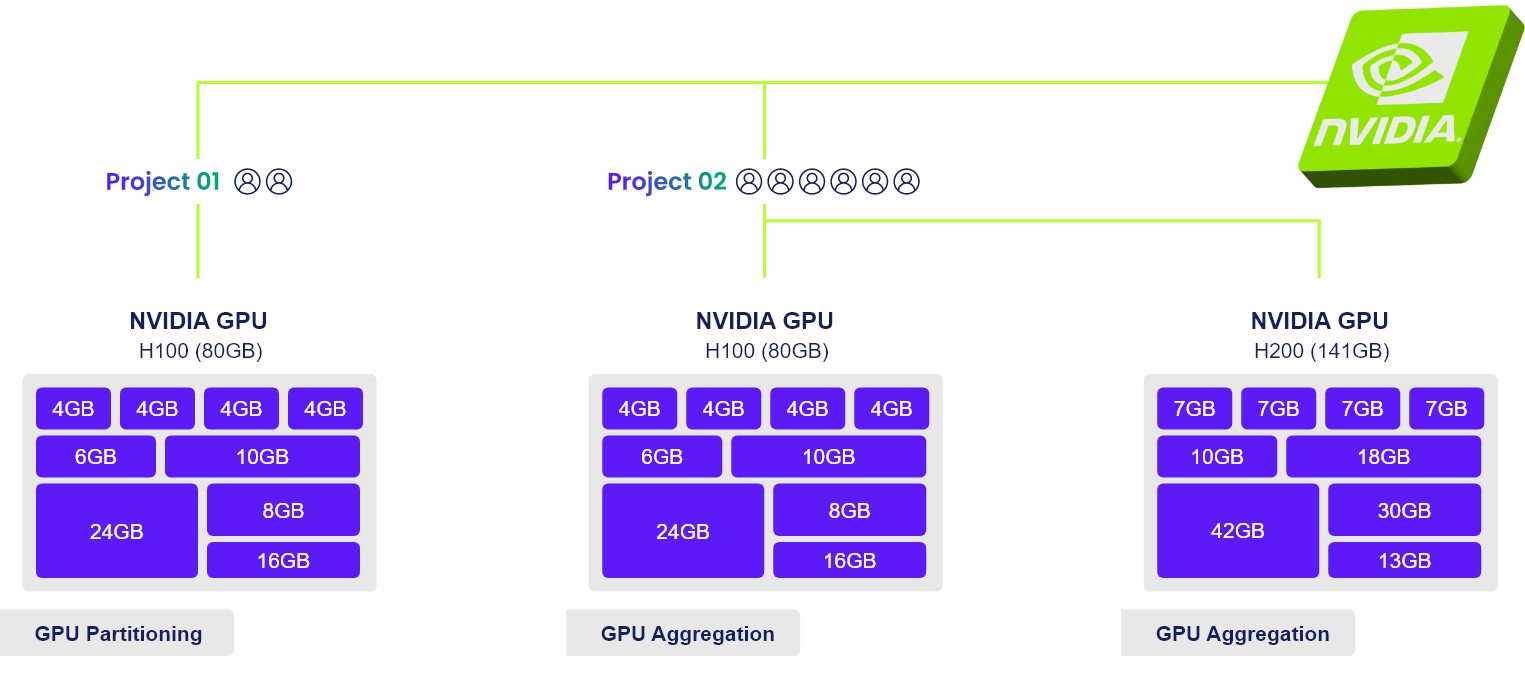

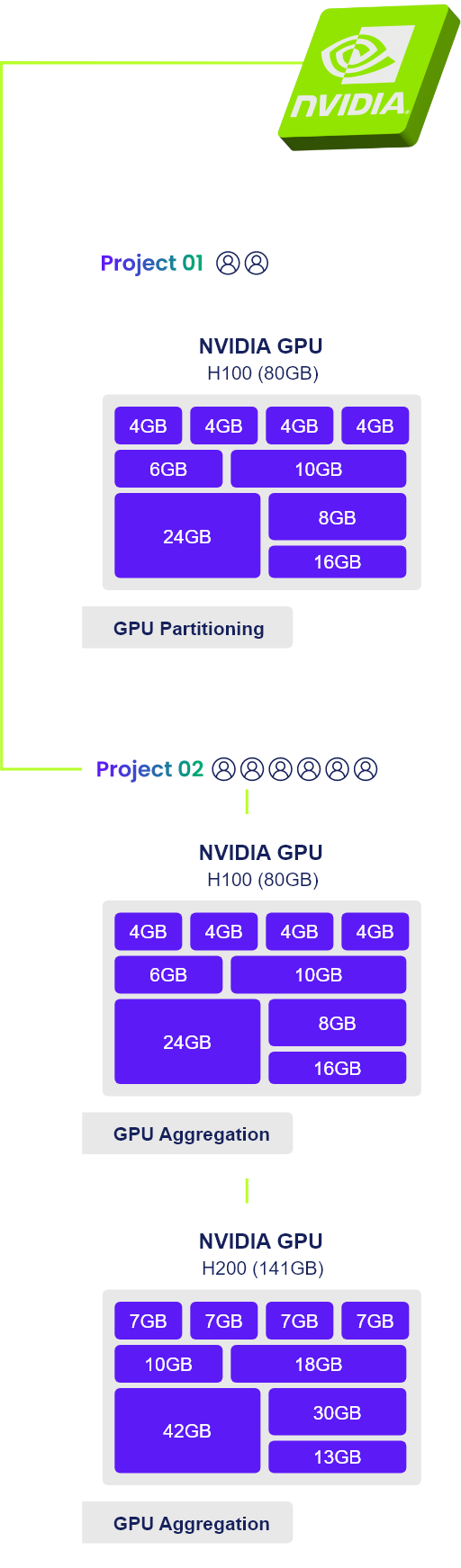

Scalable Scheduling for Effortless Management of AI Infrastructure & GPU Resources

AI-Stack is an AI infrastructure and GPU computing power scheduling platform designed to help enterprises and teams build, scale, and deploy AI services. Its main goal is to provide highly flexible, scalable solutions that can address the various needs and challenges faced when developing AI applications, such as computational power management, deployment, and optimization.

Deploy AI at Scale with GPU Slicing for Better Performance

AI-Stack uses third-generation technology to allocate GPU resources flexibly according to workload. Partitioned GPUs operate independently without interfering with one another, which keeps computing power efficiently utilized, eliminates idle time, and optimizes resource scheduling 24/7, ultimately reducing operating costs.
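To make the idea of GPU slicing concrete, here is a minimal, purely illustrative sketch of packing fractional GPU requests onto a small pool of GPUs. It is not AI-Stack's scheduler: the first-fit-decreasing strategy, the `Gpu` class, the `assign_slices` function, and the job names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """Hypothetical model of one physical GPU that can be sliced."""
    name: str
    free: float = 1.0                      # fraction of the GPU still unallocated
    jobs: list = field(default_factory=list)


def assign_slices(requests: dict[str, float], gpu_count: int) -> list[Gpu]:
    """Place each job's requested GPU fraction using first-fit decreasing.

    Largest requests are placed first; each goes to the first GPU
    that still has enough free capacity.
    """
    gpus = [Gpu(f"gpu{i}") for i in range(gpu_count)]
    ordered = sorted(requests.items(), key=lambda kv: kv[1], reverse=True)
    for job, share in ordered:
        if not 0 < share <= 1:
            raise ValueError(f"{job}: share must be in (0, 1], got {share}")
        for gpu in gpus:
            if gpu.free + 1e-9 >= share:   # small tolerance for float rounding
                gpu.jobs.append(job)
                gpu.free = round(gpu.free - share, 6)
                break
        else:
            raise RuntimeError(f"no GPU has room for {job}")
    return gpus


if __name__ == "__main__":
    # Hypothetical workloads and the fraction of a GPU each one asks for.
    demo = {"train": 0.5, "notebook": 0.25, "inference": 0.25, "etl": 0.5}
    for gpu in assign_slices(demo, gpu_count=2):
        print(gpu.name, gpu.jobs, f"free={gpu.free}")
```

In this toy model, four workloads share two GPUs instead of occupying four, which is the kind of utilization gain slicing is meant to deliver. A production scheduler would also have to account for memory limits, isolation, and priorities.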

Value and Benefits of Using AI-Stack

Comprehensive AI Infrastructure Resource Optimization

- AI-Stack provides a centralized platform that enables enterprises to manage multiple AI workloads, including data processing, model training, and deployment, all in one place.

- This simplifies operations by reducing the complexity of managing different tools, frameworks, and resources in separate systems.

- One of the primary benefits of AI-Stack is its ability to efficiently schedule and allocate GPU resources, ensuring that computational power is used optimally.

- It helps avoid bottlenecks and resource wastage, reducing downtime and speeding up the AI development cycle.

- AI-Stack is highly scalable, meaning it can grow with the needs of an organization. Whether you are working on a small-scale AI project or an enterprise-level deployment, AI-Stack can be adjusted to meet the demand.

- The platform is adaptable to different AI applications and can serve multiple use cases simultaneously.

- By efficiently managing GPU resources, AI-Stack can lower infrastructure costs. It ensures that GPUs are being used to their full potential, preventing underutilization and allowing enterprises to get the most out of their hardware investment.

- The platform allows for faster model training and deployment, reducing the time from experimentation to production.

- AI-Stack integrates well with tools and services that accelerate development workflows, allowing teams to iterate and scale AI models with greater speed.

- As AI-Stack is designed for enterprise use, it supports robust security features, ensuring the protection of sensitive data and compliance with industry regulations.

- Teams can trust that their AI operations are secure and adhere to best practices for data privacy and governance.

- Being a Solution Advisor for NVIDIA, AI-Stack is optimized for use with NVIDIA GPUs and software. This integration ensures high-performance computing capabilities, essential for AI tasks like deep learning, training large models, and handling large datasets.

- This certification provides credibility and assurance that AI-Stack is compatible with cutting-edge GPU technologies.

Shape the Future of AI Innovation with AI-Stack

AI development made effortless and accessible

AI-Stack, built on Kubernetes and Docker, offers a powerful and user-friendly environment for developers to focus on model design, training, experimentation, and deployment. It integrates leading artificial neural networks (ANN) and machine learning frameworks, providing comprehensive tools for the entire process, from data preparation to model deployment. Whether on-premise or in the cloud, AI-Stack ensures a uniform development experience.

Multi-Framework Support

Offers comprehensive support for popular machine learning frameworks and tools, including TensorFlow, PyTorch, Jupyter, Scikit-learn, and Chainer.

Convenient Environment Configuration

Effortlessly set up and manage your development environment, saving valuable time and reducing complexity.

Workflow Automation

AI-Stack boosts development efficiency with automation, streamlining the model training and deployment process for seamless operation.

Scalable and Flexible AI Development Platform

Shaping an Open, Robust AI Ecosystem

Seamlessly integrate with various third-party tools and platforms. Connect with a wide range of databases, data analytics tools, and cloud service platforms. Support the storage of model data in different formats and frameworks, helping you build comprehensive machine learning solutions.

Who Should Use AI-Stack?

AI-Stack is designed for organizations and teams working on AI initiatives that require high computational power, efficient resource management, and scalable infrastructure. The following audiences would benefit from using AI-Stack.

- Companies looking to implement AI solutions at scale, manage diverse AI workloads, and optimize their GPU resources will find AI-Stack a powerful tool.

- AI-Stack helps enterprises streamline AI operations, enhance productivity, and reduce overhead costs associated with AI infrastructure management.

- Data scientists and AI engineers working on complex AI models and large-scale data processing can benefit from the platform’s resource management and GPU optimization features.

- Teams focused on training and deploying AI models quickly will appreciate the scalability and flexibility of AI-Stack.

- Companies providing cloud services or managing large AI infrastructures can use AI-Stack to better manage GPU resources and ensure efficient usage across multiple clients or projects.

- AI-Stack can be a useful tool for cloud providers offering GPU computing power on-demand, ensuring that their infrastructure is utilized optimally.

- Startups in the AI field that need to quickly scale their solutions without incurring huge infrastructure costs can leverage AI-Stack to optimize GPU usage and accelerate their time-to-market.

- AI-Stack allows startups to focus more on building and testing AI applications without getting bogged down by infrastructure management.

- Research teams working on AI-related projects or large-scale machine learning experiments can benefit from AI-Stack’s resource scheduling and management capabilities.

- Academic institutions can use the platform to provide GPU resources efficiently for AI research, ensuring resources are available when needed.

High-Performance Computing (HPC) Needs

- Organizations with significant HPC requirements (e.g., financial services, healthcare, automotive, and manufacturing) for AI-driven analytics, simulations, or training large AI models will find AI-Stack’s ability to handle large-scale computation extremely valuable.

Effortlessly manage GPU instances on demand, wherever you are, with Flexinfra AI-Stack’s secure and scalable solution

A single platform to manage AI research and development

- H100SXM Starting at MYR 31.20/GPU/Hour

- H200SXM Starting at MYR 41.10/GPU/Hour

- 4090 Ethernet Starting at MYR 1.76/GPU/Hour

- A100 NVLink Starting at MYR 19.24/GPU/Hour
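As a rough budgeting aid, the starting rates above can be turned into a quick cost estimate. The sketch below is only an illustration: the `estimate_cost` function and the example job size are assumptions, and actual billing may differ because the listed prices are "starting at" rates.

```python
# "Starting at" rates copied from the list above, in MYR per GPU per hour.
RATES_MYR_PER_GPU_HOUR = {
    "H100SXM": 31.20,
    "H200SXM": 41.10,
    "4090 Ethernet": 1.76,
    "A100 NVLink": 19.24,
}


def estimate_cost(gpu_type: str, gpu_count: int, hours: float) -> float:
    """Return an estimated cost in MYR: rate x number of GPUs x hours."""
    if gpu_count < 0 or hours < 0:
        raise ValueError("gpu_count and hours must be non-negative")
    rate = RATES_MYR_PER_GPU_HOUR[gpu_type]   # KeyError for unlisted GPU types
    return round(rate * gpu_count * hours, 2)


if __name__ == "__main__":
    # Hypothetical job: 8 GPUs for 24 hours on each listed GPU type.
    for gpu_type in RATES_MYR_PER_GPU_HOUR:
        cost = estimate_cost(gpu_type, gpu_count=8, hours=24)
        print(f"{gpu_type}: MYR {cost:.2f}")
```

The estimate is a lower bound based on the published starting prices; storage, networking, or support charges, if any, are not included.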